Addressing Deaf or Hard-of-Hearing People in Avatar-Based Mixed Reality Collaboration Systems

Kristoffer Waldow and Arnulph Fuhrmann

In: Proceedings of the 27th IEEE Virtual Reality Conference (VR ’20), Atlanta, USA

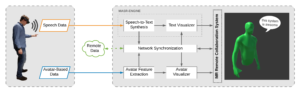

Abstract overview of our MASR-engine (Mixed Reality Audio Speech Recognition), embedded in our previously published MR remote collaboration system. Speech is recorded via the integrated microphone of the HMD and converted to text, which is sent over the network and visualized on a remote client. This enables deaf or hard-of-hearing people to participate in MR remote scenarios.

Abstract

Automatic Speech Recognition (ASR) technologies can be used to address people with auditory disabilities by integrating them into interpersonal communication via textual visualization of speech. Especially in avatar-based Mixed Reality (MR) remote collaboration systems, speech is an important additional modality that allows natural human interaction. Therefore, we propose an easy-to-integrate ASR and textual visualization extension for an avatar-based MR remote collaboration system that visualizes speech via spatially floating speech bubbles. In a small pilot study, our extension achieved a word accuracy of 97%, measured via the widely used word error rate.
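For readers unfamiliar with the metric, the following is a minimal sketch of how word error rate (WER) and word accuracy are commonly computed. It assumes the standard definition, in which WER is the word-level edit distance (substitutions, deletions, and insertions) divided by the number of reference words, and word accuracy is 1 − WER. The function names, the whitespace tokenization, and the example sentences are illustrative assumptions and are not taken from the paper's evaluation setup.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level Levenshtein distance divided by the reference length.

    Assumption: words are separated by whitespace and compared exactly
    (no case folding or punctuation stripping).
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference prefix
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]  # aligning i reference words to an empty hypothesis
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1      # reference word missing in hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Word accuracy as 1 - WER (can be negative if there are many insertions)."""
    return 1.0 - word_error_rate(reference, hypothesis)


if __name__ == "__main__":
    spoken = "please move the red cube to the left"
    recognized = "please move the red cube to left"
    print(f"WER: {word_error_rate(spoken, recognized):.3f}")
    print(f"Word accuracy: {word_accuracy(spoken, recognized):.3f}")
```

Under this definition, a word accuracy of 97% corresponds to a WER of 3%, that is, roughly three word-level errors per one hundred spoken words.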